I compared deploy speeds for Reflame, Vercel, Netlify, Cloudflare Pages on the same repo

Biggest takeaway: Reported deploy speeds might not paint the full picture

Update Nov 12, 2022

Matt Kane from Netlify replied to my Twitter thread explaining that:

1) Part of the issue here is my Netlify account had branch builds enabled, which resulted in two builds running for every commit. With a concurrency level of 1, this meant longer queue times, and would explain ~20s of the queuing time seen here: roughly the time it takes to run a branch build of similar length.

2) The other part is Netlify's previews run on PR creation, not on every commit like on all the other services tested here. This explains another ~5s of delay between creating the branch and opening the PR.

That unfortunately still leaves ~30s of the total ~55s of queuing time above unaccounted for. Initial tests with branch builds turned off show results that are much more competitive, but they were performed at 4AM on a Sunday, so I can't conclusively rule out additional queuing delays during busier hours yet. Will be investigating further during PST business hours. Stay tuned for another update!

Update Nov 14, 2022

Shorter Twitter thread version of this update if you're busy: twitter.com/lewisl9029/status/1592341968780..

I reran the original test with Netlify branch builds disabled at 2:20PM PST on a Monday, to investigate queuing behavior during times of higher traffic.

Here's the recording:

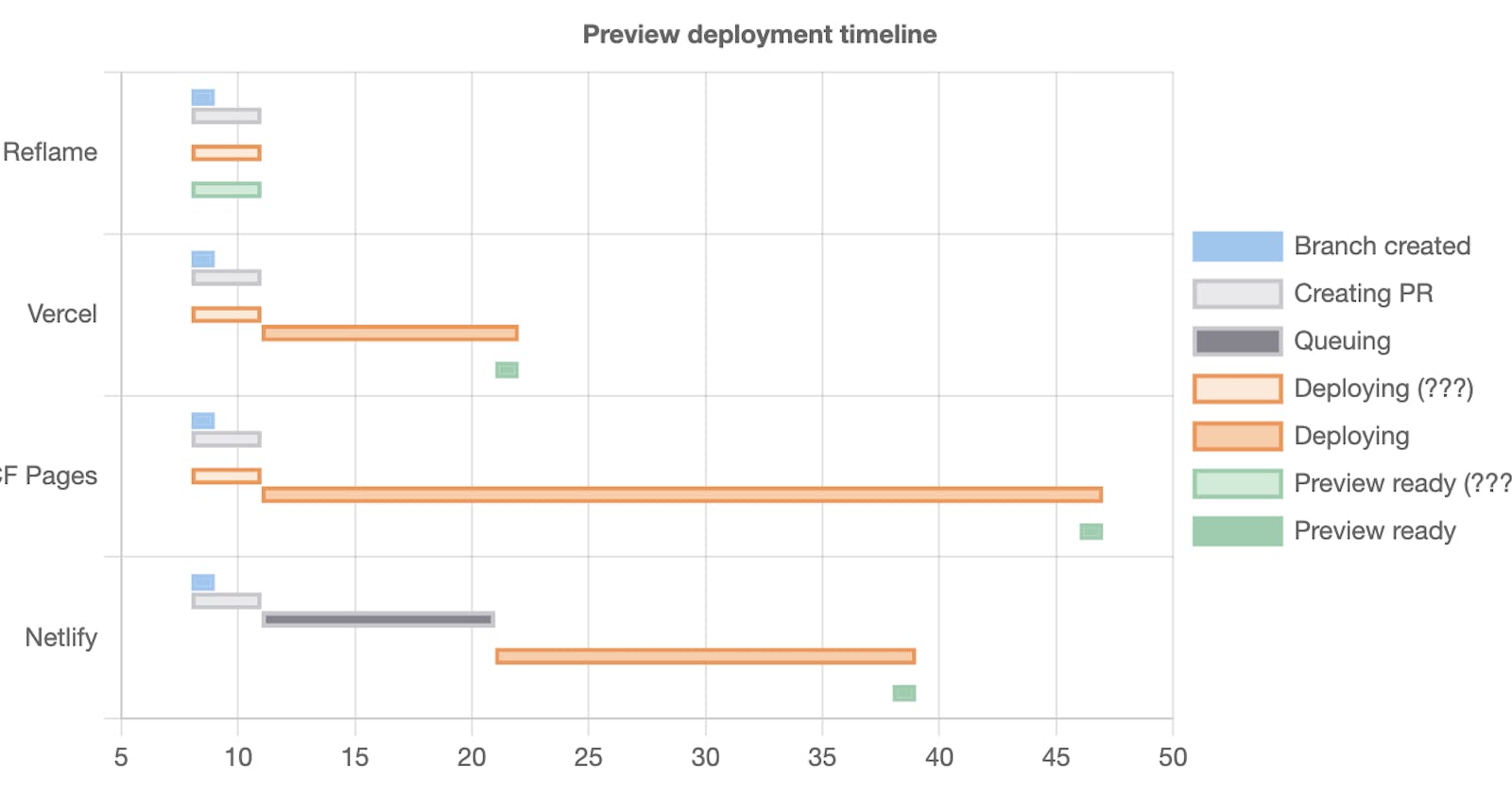

Here's an updated chart based on these new timestamps:

We can see that Netlify is now much more competitive without the double builds from enabling branch deploys, finishing in 3rd place, beating Cloudflare Pages by ~8s.

However, queuing time at ~10s is still measurably higher compared to the other services tested, and contributed the majority of the ~17s difference between Netlify and 2nd place Vercel. Even if we remove the ~3s head start others enjoyed due to starting at commit instead of at PR creation, it would still end up at ~14s behind.

This queuing time at ~10s with a single PR preview deploy also gives me a much stronger hypothesis for the composition of our earlier result of ~55s queuing time with the double build from enabling branch deploys:

- ~10s queue time for initial branch deploy

- ~15-20s build time for initial branch deploy

- ~10s queue time for subsequent PR deploy

- ~15-20s build time for subsequent PR deploy

This adds up to 50s-60s when performed sequentially with a concurrency level of 1, which matches perfectly with what we previously observed.
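As a quick sanity check of that arithmetic, here's a minimal sketch that sums the four phases, assuming they run strictly back to back at a concurrency level of 1. The phase names and `(low, high)` ranges are just my rough estimates from the list above, not numbers reported by Netlify.

```python
# Rough (low, high) estimates in seconds for each phase, from the list above.
# Assumption: with a concurrency level of 1, the phases run one after another.
phases = [
    ("branch deploy queue", 10, 10),
    ("branch deploy build", 15, 20),
    ("PR deploy queue", 10, 10),
    ("PR deploy build", 15, 20),
]


def sequential_total(phases):
    """Return the (low, high) total when every phase runs back to back."""
    low = sum(p[1] for p in phases)
    high = sum(p[2] for p in phases)
    return low, high


low, high = sequential_total(phases)
print(f"Total for all four phases run sequentially: {low}s-{high}s")
```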

I've updated the subtitle to something a bit less inflammatory in an attempt to reflect the fact that the queuing time for most users is likely not going to be as egregious as I previously experienced. For full transparency, this is what it was previously:

Biggest takeaway: Netlify might be lying to you about their deploy speed

Here's what I updated it to:

Biggest takeaway: Reported deploy speeds might not be painting the full picture

Feedback for Netlify

With all that said, here is some feedback for Netlify based on my experience comparing all of these deployment services thus far:

Enabling branch deploys shouldn't result in double builds on branches with PRs

The branch deploys option currently significantly increases queue times and costs for PR deploys. This is a gigantic footgun, and completely counterintuitive to how I expected the option to work. I suspect I'm not the only one who casually enabled it without realizing its negative effects.

Every other deployment service tested deploys every commit on every branch by default, and just reuses those commit deploys for PR previews, with 0 opportunity for double builds.

I don't necessarily expect Netlify to change their current defaults since there is a tradeoff in costs involved with building all commits vs only those on branches with associated PRs, and it's fully up to them to decide which side of that tradeoff would benefit the most users.

I would however like to see an option to enable the "deploy every commit on every branch" behavior, because it can result in significantly faster deploy speeds for newly pushed branches, sometimes even making previews ready by the time we create the PR.

Branch deploys could have been this option, but the double builds on PRs issue makes it unsuitable for any use case outside of maintaining long-lived non-default branch previews (for staging, testing, etc).

Would be great to see this addressed, either by updating the behavior of the existing option, or by introducing a new option if there's a need to preserve the existing behavior (I've been failing to think of any scenarios where the current behavior would be preferable, but that could be just a lack of imagination on my part).

Queuing times during high traffic hours need improvement

As we've seen here, Netlify's actual build speeds are within spitting distance of Vercel's, but significant time spent queuing makes it uncompetitive from the perspective of a real-world user.

From my limited testing so far, queue times seem to be much better during hours with low traffic (last tested ~4AM PST on a Sunday), so I'd wager this is a capacity problem that Netlify may be able to throw more money at to improve. But I don't have the full picture here, so maybe it's not that simple.

Make queuing times more transparent and readily accessible

Ideally, every deployment service should do this in the interest of transparency, but during my testing, Netlify is the only one I've found to suffer from queuing times to a significant enough degree to sorely need it. For everything else, queuing time has been a rounding error compared to total deploy duration.

One could argue that if Netlify improved queuing times enough to match other services tested, then this could become unnecessary. Will leave it up to them to decide which to tackle first.

There's even a profit argument in favor of providing better insight into queue times for providers like Netlify that sell higher concurrency limits (at least if your queue times for builds you have spare concurrency for are competitive). I'm sure this would come in handy for capacity planning, i.e. deciding how much concurrency to buy to make the best tradeoff between costs and team productivity.

That said, this is only a concern for everything tested here besides Reflame, because we don't artificially limit concurrency based on how much you pay us. We can afford to do this precisely because we've built the tech to only have to dedicate milliseconds of compute to each deploy as opposed to tens of seconds. That lets us run these deploys on a small handful of simple multitenant web servers instead of an elaborate compute cluster with tons of scheduling, queueing, and startup overhead, and squeeze 100x more deploys on the same hardware compared to every other service.

This 100x better tech, combined with simple, flat monthly per-user pricing with no BS, is what ensures Reflame's incentives are aligned with the interests of our customers. We are always incentivized to make deploys faster for you, so we can pocket more revenue as profit.

That concludes all of the updates I had planned. What follows is the original article. Please read it with a generous helping of salt, considering the results for Netlify were heavily skewed by the double build problem.

I also made an abridged version of this post as a Twitter thread. Check it out if you just want an executive summary.

HN discussions here: news.ycombinator.com/item?id=33576753

The motivation

It's been about a month since Reflame's launch on Show HN. Before the launch, all we had on Reflame were small hobby projects (and reflame.app itself, which was still on the small side, on the order of hundreds of modules). These days, I'm starting to see larger and larger projects pop up, which is exciting! But scary at the same time.

While Reflame's big-picture architecture is designed to be able to deploy updates with millisecond latencies for projects of arbitrary size, it's missing a bunch of important micro-optimizations that can compound catastrophically for a large enough project (think too much heavy compute running on a single node, running out of memory/temp disk space, bumping into third-party rate limits, etc). This is one of the many things that has very literally been keeping me awake at night.

So, I've been working on this since the previous update.

The setup

To start with, I've set up a new deployment-benchmark repo using our shiny new 1-click app creation feature:

A brand new repo with React, Vite, and Reflame fully set up and deployed in under 30 seconds! AFAIK this is the fastest way to create and deploy a production-ready Vite React repo on the internet. Give it a try yourself the next time you're starting a new project!

The repo works out of the box with both Vercel and Netlify, so all you need to do is to add the repo through their dashboards to start deploying with those simultaneously, if you want to do your own comparisons before deciding. Cloudflare Pages requires slightly more setup, more on this later.

There's a lot more benchmarking and optimizations I still have to do over the next few weeks and months, but before moving on to more elaborate setups, I thought it would be useful to first take some time to establish a baseline deployment speed for this repo while it's still a tiny, near-stock Vite React app with <10 source files total.

To keep myself honest about where Reflame shines and where it falls short, I've also connected a few other deployment services for client-rendered React apps that folks might already be using. To start with, I've added Vercel, Netlify, and Cloudflare Pages.

Let me know in the comments if there are others you'd like to see! Anything that can deploy a stock Vite React app should be fair game.

The results

Without further ado, here's an unedited screen recording of me creating a new PR and waiting for all the preview deploys to finish:

If you're patient enough to want to watch the whole thing, I highly recommend watching at 2x speed. It'll take a while.

Alternatively, keep scrolling for a pretty chart, and some commentary on the results.

I realize this is not very scientific since we're only looking at a single run, but I've repeated this dozens of times over the past few weeks and have not seen any variations significant enough to materially affect the commentary here. Over the next few weeks/months I plan on evolving the repo to conduct more sophisticated and statistically rigorous benchmarks on an ongoing basis to use as health checks and detect performance regressions. Keep an eye out for updates on this blog!

I'd also like to invite anyone curious enough to try reproducing the results here themselves by creating a new Vite React repo and connecting all of these deployment services to it. Shameless plug: easiest way to create a repo for this purpose is through Reflame's 1-click app creation feature. The entire process shouldn't take longer than 30 minutes if you follow this route.

The details

I made the chart above using rough timestamps in the video to help visualize how long each deploy ends up taking, and the various components involved.

🥇 Reflame

The results for Reflame here are not all that interesting, because it finished well before I could even finish creating the PR to see status checks. The self-reported latency is 1.071s, but it's impossible to measure in any further granularity from the video alone.

That said, what we're looking to measure with this exercise is the latency experienced by a real world user, so this result is fast enough to be considered instant for that purpose (I have a lot more to say about this at the end). This shouldn't come as a surprise to anyone who's been following along. This is Reflame's bread and butter, after all.

Results for the other services, however, are actually a lot more interesting than I expected them to be. Let's go through each, ordered by their ranking.

🥈 Vercel

Vercel came in second place, at an end to end deployment latency of about 16 seconds from when the branch was created.

This is actually a very respectable showing considering Vercel is just cloning the repo and running Vite in a container/VM. This is exactly what Netlify and Cloudflare Pages do as well, so in theory there isn't actually that much room for differentiation between these three services. The fact that there was such a dramatic difference between them in practice came as a huge surprise to me, and I'll be diving into the major contributors in the later sections.

Even though we're deploying a Vite app here, Reflame does not actually run Vite anywhere in its deployment pipeline, unlike everything else being compared here. Actually running Vite requires spinning up a container/VM, running `git clone`, `npm install`, `vite build`, and then finally deploying the result. Each of these operations has a latency floor on the order of whole seconds, which would make the kinds of latencies Reflame targets completely impossible.

Instead, Reflame fetches only the modules that have actually changed, performs a minimal transform on them to get rid of JSX and TypeScript syntax, and deploys them as independent ES modules. We then sprinkle some custom dependency analysis and aggressive prefetching on top to flatten the module loading waterfall, in order to keep initial load performance reasonable while optimizing for minimal write-amplification (key to tapering the normally linear scaling of deployment speed with codebase size) and maximum cache granularity.
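To make the write-amplification point a bit more concrete, here's a toy sketch of the general idea of only touching modules whose content changed. To be clear, this is not Reflame's actual implementation: `content_hash`, `changed_modules`, the hashing scheme, and the example file names are all made up for illustration.

```python
import hashlib


def content_hash(source: str) -> str:
    """Hash a module's source so unchanged modules can be detected cheaply."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def changed_modules(previous: dict, current: dict) -> list:
    """Return paths of modules that are new or whose content differs.

    `previous` maps path -> hash from the last deploy (hypothetical storage),
    `current` maps path -> source text at the new commit.
    """
    return sorted(
        path
        for path, source in current.items()
        if previous.get(path) != content_hash(source)
    )


# Hypothetical three-module app where only App.jsx was edited.
before = {
    "src/main.jsx": "import App from './App.jsx'",
    "src/App.jsx": "export default () => <h1>Hello</h1>",
    "src/util.js": "export const add = (a, b) => a + b",
}
after = {**before, "src/App.jsx": "export default () => <h1>Hello, world</h1>"}

deployed = {path: content_hash(src) for path, src in before.items()}
to_deploy = changed_modules(deployed, after)
print(to_deploy)  # only the edited module would need transforming and writing
```

In this toy version the work per deploy scales with the number of edited modules rather than the size of the codebase, which is the property the paragraph above is describing.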

Cache granularity in particular is arguably more important than initial load performance for the kinds of apps Reflame is intended for (think product dashboards with no publicly indexable content that people repeatedly log into), yet it has been largely ignored by the industry in favor of chasing higher Lighthouse scores, which are optimized for measuring performance for initial visits. I'll have more to say on this topic in a later post.

Before I move on though, I wanted to note that, if you take a look at the screen recording, you can see I actually didn't realize the deploy was finished until much later, because of a bug with the GitHub UI failing to update the status check in time. We can see the comment at the top of the screen update at around the 30 second mark, but I was tunnel-visioning hard on the status checks and didn't realize until about 10 seconds later.

Chances are, we've all probably seen this happen on GitHub before. Vercel is obviously not at fault here, so I used the timestamp of the comment appearing to calculate deployment time for the purpose of this exercise. However, when this happens in the real world, it invariably results in a crappy user experience, regardless of who's at fault.

This can happen when creating a new PR for any deployment service on GitHub, except Reflame, because Reflame deploys are practically guaranteed to always finish deploying before any human can finish creating a PR from their branch (in fact, I wouldn't be surprised if the GitHub API calls involved here alone would take longer than a Reflame deploy, even before taking the human bottleneck into account). Reflame status checks on PR creation will be complete on the initial page load, so they aren't ever going to be affected by flaky WebSocket updates from GitHub.

Fun fact: I actually had to stop sending the in-progress status check entirely for Reflame. The in-progress and completed status checks were always sent in such quick succession that it sometimes resulted in races that caused the status check to never be marked as complete. Luckily, Reflame deploys so fast that users would practically never see an in-progress status check even if we sent it anyways, so it was fairly safe to omit.

🥉 Cloudflare Pages

Third place was Cloudflare Pages, finishing in about 37 seconds, or about 21 seconds after Vercel.

A quick comparison of the build logs from Vercel and CF Pages presents some clues as to where the differences might lie:

Here's CF Pages:

2022-10-25T09:50:17.630287Z Cloning repository...

2022-10-25T09:50:18.520063Z From https://github.com/reflame/deployment-benchmark

2022-10-25T09:50:18.520692Z * branch 733417b754cefeff2ff56d88d35ae9fe9f36fb8d -> FETCH_HEAD

2022-10-25T09:50:18.520901Z

2022-10-25T09:50:18.56086Z HEAD is now at 733417b Update App.jsx

2022-10-25T09:50:18.5614Z

2022-10-25T09:50:18.698318Z

2022-10-25T09:50:18.724411Z Success: Finished cloning repository files

2022-10-25T09:50:21.814043Z Installing dependencies

2022-10-25T09:50:21.824443Z Python version set to 2.7

2022-10-25T09:50:24.931283Z Downloading and installing node v16.18.0...

2022-10-25T09:50:25.339846Z Downloading https://nodejs.org/dist/v16.18.0/node-v16.18.0-linux-x64.tar.xz...

2022-10-25T09:50:25.796972Z Computing checksum with sha256sum

2022-10-25T09:50:25.929794Z Checksums matched!

2022-10-25T09:50:30.286701Z Now using node v16.18.0 (npm v8.19.2)

2022-10-25T09:50:30.694917Z Started restoring cached build plugins

2022-10-25T09:50:30.707332Z Finished restoring cached build plugins

2022-10-25T09:50:31.215194Z Attempting ruby version 2.7.1, read from environment

2022-10-25T09:50:34.746392Z Using ruby version 2.7.1

2022-10-25T09:50:35.099867Z Using PHP version 5.6

2022-10-25T09:50:35.251971Z 5.2 is already installed.

2022-10-25T09:50:35.281233Z Using Swift version 5.2

2022-10-25T09:50:35.281962Z Started restoring cached node modules

2022-10-25T09:50:35.297622Z Finished restoring cached node modules

2022-10-25T09:50:35.807495Z Installing NPM modules using NPM version 8.19.2

2022-10-25T09:50:36.217549Z npm WARN config tmp This setting is no longer used. npm stores temporary files in a special

2022-10-25T09:50:36.217889Z npm WARN config location in the cache, and they are managed by

2022-10-25T09:50:36.218149Z npm WARN config [`cacache`](http://npm.im/cacache).

2022-10-25T09:50:36.618472Z npm WARN config tmp This setting is no longer used. npm stores temporary files in a special

2022-10-25T09:50:36.619103Z npm WARN config location in the cache, and they are managed by

2022-10-25T09:50:36.619309Z npm WARN config [`cacache`](http://npm.im/cacache).

2022-10-25T09:50:38.795358Z

2022-10-25T09:50:38.795652Z added 85 packages, and audited 86 packages in 2s

2022-10-25T09:50:38.795817Z

2022-10-25T09:50:38.795937Z 8 packages are looking for funding

2022-10-25T09:50:38.796062Z run `npm fund` for details

2022-10-25T09:50:38.797004Z

2022-10-25T09:50:38.797278Z found 0 vulnerabilities

2022-10-25T09:50:38.807557Z NPM modules installed

2022-10-25T09:50:39.386013Z npm WARN config tmp This setting is no longer used. npm stores temporary files in a special

2022-10-25T09:50:39.386411Z npm WARN config location in the cache, and they are managed by

2022-10-25T09:50:39.386585Z npm WARN config [`cacache`](http://npm.im/cacache).

2022-10-25T09:50:39.405024Z Installing Hugo 0.54.0

2022-10-25T09:50:40.188585Z Hugo Static Site Generator v0.54.0-B1A82C61A/extended linux/amd64 BuildDate: 2019-02-01T10:04:38Z

2022-10-25T09:50:40.192651Z Started restoring cached go cache

2022-10-25T09:50:40.211269Z Finished restoring cached go cache

2022-10-25T09:50:40.358634Z go version go1.14.4 linux/amd64

2022-10-25T09:50:40.37351Z go version go1.14.4 linux/amd64

2022-10-25T09:50:40.376529Z Installing missing commands

2022-10-25T09:50:40.376768Z Verify run directory

2022-10-25T09:50:40.376908Z Executing user command: npm run build

2022-10-25T09:50:40.853109Z npm WARN config tmp This setting is no longer used. npm stores temporary files in a special

2022-10-25T09:50:40.853487Z npm WARN config location in the cache, and they are managed by

2022-10-25T09:50:40.853695Z npm WARN config [`cacache`](http://npm.im/cacache).

2022-10-25T09:50:40.869037Z

2022-10-25T09:50:40.86927Z > example-vite-react@0.0.0 build

2022-10-25T09:50:40.869412Z > vite build

2022-10-25T09:50:40.869531Z

2022-10-25T09:50:41.510958Z vite v3.1.7 building for production...

2022-10-25T09:50:41.547569Z transforming...

2022-10-25T09:50:42.531186Z ✓ 38 modules transformed.

2022-10-25T09:50:42.645557Z rendering chunks...

2022-10-25T09:50:42.651676Z dist/assets/react.35ef61ed.svg 4.03 KiB

2022-10-25T09:50:42.651924Z dist/assets/vite.4a748afd.svg 1.46 KiB

2022-10-25T09:50:42.652091Z dist/assets/icon.2129a660.svg 4.62 KiB

2022-10-25T09:50:42.654023Z dist/index.html 0.44 KiB

2022-10-25T09:50:42.655668Z dist/assets/index.1d2a7c20.css 1.80 KiB / gzip: 0.92 KiB

2022-10-25T09:50:42.660648Z dist/assets/index.c65a176d.js 140.69 KiB / gzip: 45.44 KiB

2022-10-25T09:50:42.694059Z Finished

2022-10-25T09:50:42.694677Z Note: No functions dir at /functions found. Skipping.

2022-10-25T09:50:42.695099Z Validating asset output directory

2022-10-25T09:50:43.554269Z Deploying your site to Cloudflare's global network...

2022-10-25T09:50:48.117372Z Success: Assets published!

2022-10-25T09:50:48.584581Z Success: Your site was deployed!

Here's Vercel:

[02:50:18.866] Cloning github.com/reflame/deployment-benchmark (Branch: edit, Commit: 733417b)

[02:50:19.250] Cloning completed: 383.076ms

[02:50:19.652] Looking up build cache...

[02:50:20.970] Build cache downloaded [11.34 MB]: 1057ms

[02:50:21.009] Running "vercel build"

[02:50:21.507] Vercel CLI 28.4.12-05a80a4

[02:50:22.086] Installing dependencies...

[02:50:22.728]

[02:50:22.729] up to date in 315ms

[02:50:22.729]

[02:50:22.729] 8 packages are looking for funding

[02:50:22.729] run `npm fund` for details

[02:50:22.740] Detected `package-lock.json` generated by npm 7+...

[02:50:22.741] Running "npm run build"

[02:50:23.040]

[02:50:23.040] > example-vite-react@0.0.0 build

[02:50:23.040] > vite build

[02:50:23.041]

[02:50:23.487] vite v3.1.7 building for production...

[02:50:23.525] transforming...

[02:50:24.662] ✓ 38 modules transformed.

[02:50:24.778] rendering chunks...

[02:50:24.782] dist/assets/react.35ef61ed.svg 4.03 KiB

[02:50:24.783] dist/assets/vite.4a748afd.svg 1.46 KiB

[02:50:24.783] dist/assets/icon.2129a660.svg 4.62 KiB

[02:50:24.784] dist/index.html 0.44 KiB

[02:50:24.785] dist/assets/index.1d2a7c20.css 1.80 KiB / gzip: 0.92 KiB

[02:50:24.790] dist/assets/index.c65a176d.js 140.69 KiB / gzip: 45.44 KiB

[02:50:24.833] Build Completed in /vercel/output [3s]

[02:50:25.479] Generated build outputs:

[02:50:25.480] - Static files: 9

[02:50:25.480] - Serverless Functions: 0

[02:50:25.480] - Edge Functions: 0

[02:50:25.480] Deployed outputs in 1s

[02:50:26.134] Build completed. Populating build cache...

[02:50:28.818] Uploading build cache [11.34 MB]...

[02:50:29.688] Build cache uploaded: 870.16ms

[02:50:29.718] Done with "."

The difference in sheer length is what first jumped out at me.

Looking closer, Cloudflare Pages seems to be initializing Ruby, PHP, Python, and Swift in a project that doesn't use anything other than Node.js. It also appears to be downloading Node.js, whereas Vercel seems to have it baked into the image.

Adding up the setup steps at the beginning and various other unrelated steps sprinkled throughout (why Hugo???), this seems to be responsible for ~17s of the overall latency. Cutting all of this cruft out would bring latency down to ~20s, which would put CF Pages within spitting distance of Vercel.
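For anyone who wants to check that ballpark figure, here's a rough sketch that computes the gap between lines of the CF Pages log above. Treating "Finished cloning" and "Installing NPM modules" as the boundaries of the setup phase is my own assumption about where the cruft starts and ends (it leaves out the Hugo and Go steps later on), and `parse_ts` and `seconds_between` are just helper names for this example.

```python
from datetime import datetime

# A few lines copied from the CF Pages log above.
LOG = """\
2022-10-25T09:50:17.630287Z Cloning repository...
2022-10-25T09:50:18.724411Z Success: Finished cloning repository files
2022-10-25T09:50:35.807495Z Installing NPM modules using NPM version 8.19.2
2022-10-25T09:50:40.376908Z Executing user command: npm run build
2022-10-25T09:50:48.584581Z Success: Your site was deployed!
"""


def parse_ts(line: str) -> datetime:
    """Parse the leading timestamp; %f accepts 1 to 6 fractional digits."""
    stamp = line.split(" ", 1)[0]
    return datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%S.%fZ")


def seconds_between(log: str, start_marker: str, end_marker: str) -> float:
    """Seconds elapsed between the first lines containing each marker."""
    lines = log.splitlines()
    start = next(parse_ts(l) for l in lines if start_marker in l)
    end = next(parse_ts(l) for l in lines if end_marker in l)
    return (end - start).total_seconds()


setup = seconds_between(LOG, "Finished cloning", "Installing NPM modules")
total = seconds_between(LOG, "Cloning repository...", "Your site was deployed")
print(f"setup before npm install: {setup:.1f}s of {total:.1f}s in the log")
```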

CF Pages is still a young product compared to Vercel and Netlify, and based on their track record I have little doubt they will try to optimize this cruft away in due time and become more competitive.

Quick side note: Cloudflare Pages was the only service that required extra configuration to deploy this near-stock Vite project. There was no preset for Vite, initial deploy failed with stock settings, and I had to look up their docs to set it up with the right commands and env vars.

If you're setting this up to verify results for yourself, just make sure to set the build command manually to `npm run build`, the build output directory to `/dist`, and set the `NODE_VERSION` env var to `16` for it to build correctly.

Both Vercel and Netlify were able to deploy the repo as soon as I connected it without any manual configuration.

This is unrelated to our investigation into deployment speed, but I thought I'd flag this anyways in case the folks at Cloudflare are interested in knocking out this quick win.

🐢 Netlify

Last and most disappointingly, we have Netlify.

Netlify performed horrendously here. It didn't even manage to post an in-progress commit status until a good 24s after everything else was already fully deployed. It then took another ~19s to finish after that, totaling ~80s of end to end latency.

Yet the Netlify status check proudly claims a deploy time of 15s. If we took their word for it, this would put them on par with Vercel and Cloudflare. But in reality it wildly misrepresents the end-to-end latency we would actually experience, by a factor of more than 5x.

This is an egregious misrepresentation by any standard, and I'd consider it downright deceptive to not include queueing time this significant in the reported result. Worse still, this queuing time is nowhere to be found even on their own build results page, so users have 0 visibility into how long their deploys end up actually taking.

To offer a bit more nuance, it's impossible for a GitHub app to actually measure and report the exact end-to-end latency that users experience, since the earliest we can start measuring is when we receive a webhook.

(That is, at least if we want measurements that are resistant against clock drift, which could result in wildly inaccurate, sometimes even negative latency measurements for something like Reflame, since it completes consistently in well under 2s)
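Here's a minimal sketch of what I mean by measuring from webhook receipt in a way that's resistant to clock drift: take every timestamp from a single monotonic clock on the machine handling the deploy, rather than subtracting a timestamp produced by someone else's clock. `DeployTimer` and its method names are hypothetical, not part of any real GitHub, Netlify, or Reflame API, and the sketch assumes a single process sees the webhook, the build start, and the finish.

```python
import time
from typing import Callable, Optional


class DeployTimer:
    """Tracks queue and build time for one deploy using a monotonic clock.

    A monotonic clock never goes backwards, so durations can't come out
    negative the way they can when subtracting wall-clock times taken on
    two different machines.
    """

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._received = clock()  # construct this as soon as the webhook arrives
        self._build_started: Optional[float] = None
        self._finished: Optional[float] = None

    def build_started(self) -> None:
        self._build_started = self._clock()

    def finished(self) -> None:
        self._finished = self._clock()

    def report(self) -> dict:
        if self._build_started is None or self._finished is None:
            raise RuntimeError("deploy has not finished yet")
        return {
            "queued_s": self._build_started - self._received,
            "build_s": self._finished - self._build_started,
            "total_s": self._finished - self._received,
        }


# Usage with a fake clock so the numbers are deterministic.
ticks = iter([0.0, 10.0, 25.0])
timer = DeployTimer(clock=lambda: next(ticks))
timer.build_started()
timer.finished()
print(timer.report())
```

Reporting `queued_s` alongside `build_s` is the part that matters for this article: the total a user waits includes both.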

Vercel reported a duration of 9s, so was off by 7s.

Cloudflare Pages reported a duration of 33s, so was off by 4s.

For Reflame, the reported 1.071s is probably still off by some amount, but it was below the minimum threshold of measurement possible for this exercise, because the deploy was finished by the time we were able to see commit statuses.

Netlify, on the other hand, reported a duration of 15s, but had an end to end latency of 80s, so was off by 65s. It had plenty of time from receiving the webhook to measure and report the full queueing time, but chose not to in order to make itself look better by reporting only the time spent building.
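To put those gaps side by side, here's a small sketch that computes how much each service under-reported, using only the rough numbers quoted above. Reflame is left out because its deploy finished before anything could be measured from the video, and `unreported` is just a helper name for this example.

```python
# (reported, end_to_end) durations in seconds, from the numbers above.
results = {
    "Vercel": (9, 16),
    "Cloudflare Pages": (33, 37),
    "Netlify": (15, 80),
}


def unreported(reported: float, end_to_end: float) -> tuple:
    """Return (seconds missing from the report, end-to-end / reported ratio)."""
    return end_to_end - reported, end_to_end / reported


for name, (reported, e2e) in results.items():
    gap, ratio = unreported(reported, e2e)
    print(f"{name}: reported {reported}s, actual ~{e2e}s, off by {gap}s ({ratio:.1f}x)")
```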

My hypothesis is this long queue time is the result of an overly aggressive cost/capacity management strategy. In a vacuum, this level of aggressive queuing could be considered perfectly reasonable, especially for users on free plans, as a cost saving measure. But in the real world we live in, there are multiple competitors offering free plans with comparable functionality and practically 0 queuing time (and with Reflame specifically, practically 0 end to end deploy time as well).

So if I were a Netlify user and cared about deployment speed, I'd be taking a serious look at the many great alternatives out there if they don't end up addressing this soon. If this is in fact a cost saving measure as I hypothesized, Netlify should be able to do so just by flipping a switch and throwing some more money at the problem to become competitive with everybody else. And if they still choose not to, frankly they don't deserve to have you as a user.

Switching costs should be negligible if you're just deploying a simple client-rendered app composed of a bunch of static assets, and in return you'll shave 50s+ off your deploys for the rest of the lifetime of the project (or practically the entire 80s if you choose Reflame). Feel free to DM me at @lewisl9029 on Twitter if you need help with this, even if you're planning to switch to something other than Reflame. I just can't stand seeing Netlify continue to have users while offering such an egregiously subpar experience in an area I'm so passionate about.

For the record, across all of the dozens of times I ran this test, Netlify always exhibited similar performance characteristics, and never even once posted an in-progress check before the others had completed.

Again, I invite anyone curious enough to try this for themselves on a new Vite React app to independently verify these results. Especially interested in seeing results from those with paid plans on Netlify, which may not suffer from queueing times to a similar degree. On the $99/member business plan, they sell something they call the "Priority build environment", after all. Not that I'd recommend paying $99/member to get the baseline experience everybody else is offering for free.

These results are especially frustrating if we consider that Netlify could have placed second in this race if it didn't have this ridiculously long queuing time. From the time the status check appeared, it actually only took ~19s to complete the deployment, which would have put it neck and neck with Vercel. Instead, because it took 50s+ to even start, it finished dead last by a hilarious margin.

Parting words

Finally, allow me to end on this rant:

The term "instant" has lost all meaning in the deployment tooling space. Every service tested here, except Reflame, currently uses the term "instant" to describe their preview deploys:

This is especially ironic because as we've seen with this exercise, only Reflame is actually fast enough to offer an experience that is truly "instant" in the only useful definition of the word: an experience fast enough to never leave you waiting, even for a brief second.

More and more, I've been intentionally avoiding nebulous terms like "instant" to describe Reflame's deployment speed. Instead I like to present it in terms of cold, hard numbers: 100ms - 500ms from the VSCode extension, 0.5s - 3s from the GitHub app.

Not only is this more honest and easier on my conscience, I find that it connects with users on a more visceral level, and better piques their intellectual curiosity around how Reflame deploys so quickly, which is a great way to get them in the door. Most importantly though, it helps me remind myself that there's always room for improvement.

Vite's update latency for local development is best measured in tens of milliseconds. Reflame deploys to the internet on every update for its shareable, local-dev-like, HMR-enabled Live Previews, so the speed of light in fiber optics means Reflame might never be able to beat it with its current architecture (though I have some exciting ideas for a future version of Reflame that might, in the distant future!). That won't stop me from trying to get it as close as the physical limits of the universe will allow.

You don't have to just take my word for that either.

I chose a flat monthly fee per user as the pricing model for Reflame's deployment product precisely to ensure Reflame the business will always be financially incentivized to make your deploys faster, allowing us to serve more customers on the same hardware and pocket the difference as profit. This is in stark contrast to just about every other CI/CD provider out there that relies on usage-based pricing and ends up financially disincentivizing itself from doing anything that could make deploys faster for its users (ask me how I know this). I'll probably have more to say on this topic in the future as well...

... But, until then, really appreciate you spending the time to read up to this point!

If this piece piqued your interest in Reflame, and you're building a client-rendered React app, go give it a try for free at reflame.app! Would love to repay you many times over for the time you spent by making sure you never have to wait for a deploy ever again. 🙂