Reflame now deploys your NPM package updates faster than a node_modules cache restore

And stays just as fast as you add more and more packages

My first order of business with Reflame is to make waiting for deployments a relic of the past.

Ever since the very first MVP, Reflame has been able to meet this bar for most typical deploys users make on a day-to-day basis, usually landing somewhere between:

100-400ms when deploying from our VSCode extension

500-2000ms when deploying from our GitHub app

If you're used to a traditional deployment service that takes at minimum 10s of seconds to deploy even the smallest of changes on the tiniest of apps and only gets worse over time, your first reaction to the claims above is probably "I'll believe it when I see it".

For you, dear discerning reader, I have a demo:

Still skeptical? Reflame is completely free for solo hobby projects, so take it for a spin for yourself by signing up at https://reflame.app.

Talking just about typical deploys doesn't paint the full picture, however.

There were two notable edge cases I've always been acutely aware of in Reflame that were distinctly not free of waiting.

Over the past few weeks, I've made substantial improvements to both of these edge cases, but today's post will be focusing on the one that will have a much more noticeable effect on the day-to-day quality of life for most Reflame users:

Updating NPM packages.

Some quick spoilers on the other edge case since I can't help myself:

Deploying a ton of brand new modules at the same time also used to scale very poorly.

The compute efficiency of Babel left much to be desired, heavily bottlenecking us to the point of almost linear latency scaling with the number of modules being deployed. I.e. if deploying a single 500ish-line TSX module took 300ms, deploying 100 similar modules would likely take somewhere in the ballpark of 20 seconds.

Because Reflame is really good at never doing the same piece of work twice, this ended up being an extremely rare edge case in practice, basically only limited to:

When importing an existing repo as a new Reflame app. All/most modules in the repo are new to Reflame, so work needed to be done for every one.

When making large-scale refactors through codebase-wide search & replace, or third-party refactoring tools. All of these changed modules are new to Reflame as well, so work needed to be done for each.

When we need to invalidate Reflame's transform caches due to changes in our transforms/caching logic, which is still happening a bit more frequently than I'd like. Every time we do this, new work will need to be done for every module in every app.

I ended up switching to a custom fork of SWC, which reduced the wall-clock time of the compute required to transform a 500ish-line module from ~100ms to ~10ms. This drastically improved the scaling characteristics for deploying tons of modules (most of the wall-clock time is now dominated by network I/O, which is a lot easier to parallelize than compute).

Not only that, but doing this also chopped off a good ~90ms from typical single module deploys, making the typical deploys in Reflame even faster!

In the hypothetical example earlier, deploying that single 500ish-line TSX module would now take closer to 210ms, and deploying 100 similar modules would likely take somewhere in the ballpark of 5 seconds!

I'm saving the juicy technical details and benchmarks for a future blog post. No promises on when though.

The past

Updating NPM packages in Reflame tended to scale in latency with the total size and number of all the NPM packages in the app.

To give you a better sense of the scaling characteristics here:

It could take about 20s to bump the version of a single package in reflame.app, which used about 16 packages at the time (direct dependencies only, not counting transitive dependencies).

Contrast this to about 5s to do the same in a newly-created app with just 1 package.

We cached sets of NPM packages so you never have to wait for the deployment of the same set of packages twice, which is good.

However, the "set of NPM packages" was the only granularity we cached at, meaning any change in an individual package within that set (say, a version bump) will invalidate the cache and require entirely new work to be done for that new set. Not so good.
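
To make the problem concrete, here's a minimal TypeScript sketch of caching at the granularity of the whole package set. Everything in it is made up for illustration (the `setCacheKey` and `deploySet` names, the string standing in for real work, the example versions), so treat it as a picture of the idea rather than Reflame's actual code:

```typescript
// Hypothetical names and data for illustration; not Reflame's actual code.
type PackageSet = Record<string, string>; // package name -> exact version

// One cache key for the whole set, sorted so that key order doesn't matter.
function setCacheKey(packages: PackageSet): string {
  return Object.keys(packages)
    .sort()
    .map((pkgName) => `${pkgName}@${packages[pkgName]}`)
    .join(",");
}

const setCache = new Map<string, string>(); // set key -> processed output
let packagesProcessed = 0; // counts how many packages we did work for

function deploySet(packages: PackageSet): string {
  const key = setCacheKey(packages);
  const cached = setCache.get(key);
  if (cached !== undefined) return cached; // identical set seen before: no work

  // Cache miss: every package in the set is processed again,
  // including the ones whose versions did not change.
  packagesProcessed += Object.keys(packages).length;
  const output = `bundle(${key})`; // stand-in for the real work
  setCache.set(key, output);
  return output;
}

const original: PackageSet = {
  react: "18.2.0",
  "react-dom": "18.2.0",
  lodash: "4.17.20",
};
const bumped: PackageSet = { ...original, lodash: "4.17.21" };

deploySet(original); // miss: 3 packages processed
deploySet(original); // hit: nothing processed
deploySet(bumped); // miss again: all 3 processed for a 1-package bump
console.log(packagesProcessed); // 6
```

The last call is the painful one: a single version bump changes the key, so the cache can't help at all.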

This approach compares somewhat poorly even to traditional CLI-tooling-based deployment platforms if we consider it *in isolation*, since caching and restoring directories like node_modules (a very commonplace optimization) can make small updates to large sets of NPM packages much faster than doing a full install of the entire package set.

So why didn't Reflame go with the same approach?

Eagle-eyed readers will have noticed the italics around *in isolation* when I contrasted the approaches earlier.

While the node_modules directory caching approach makes the task of updating specific NPM packages fast in isolation, it's important to take into account the end-to-end latency contributed by the very act of saving and restoring the entire node_modules directory on every deploy.

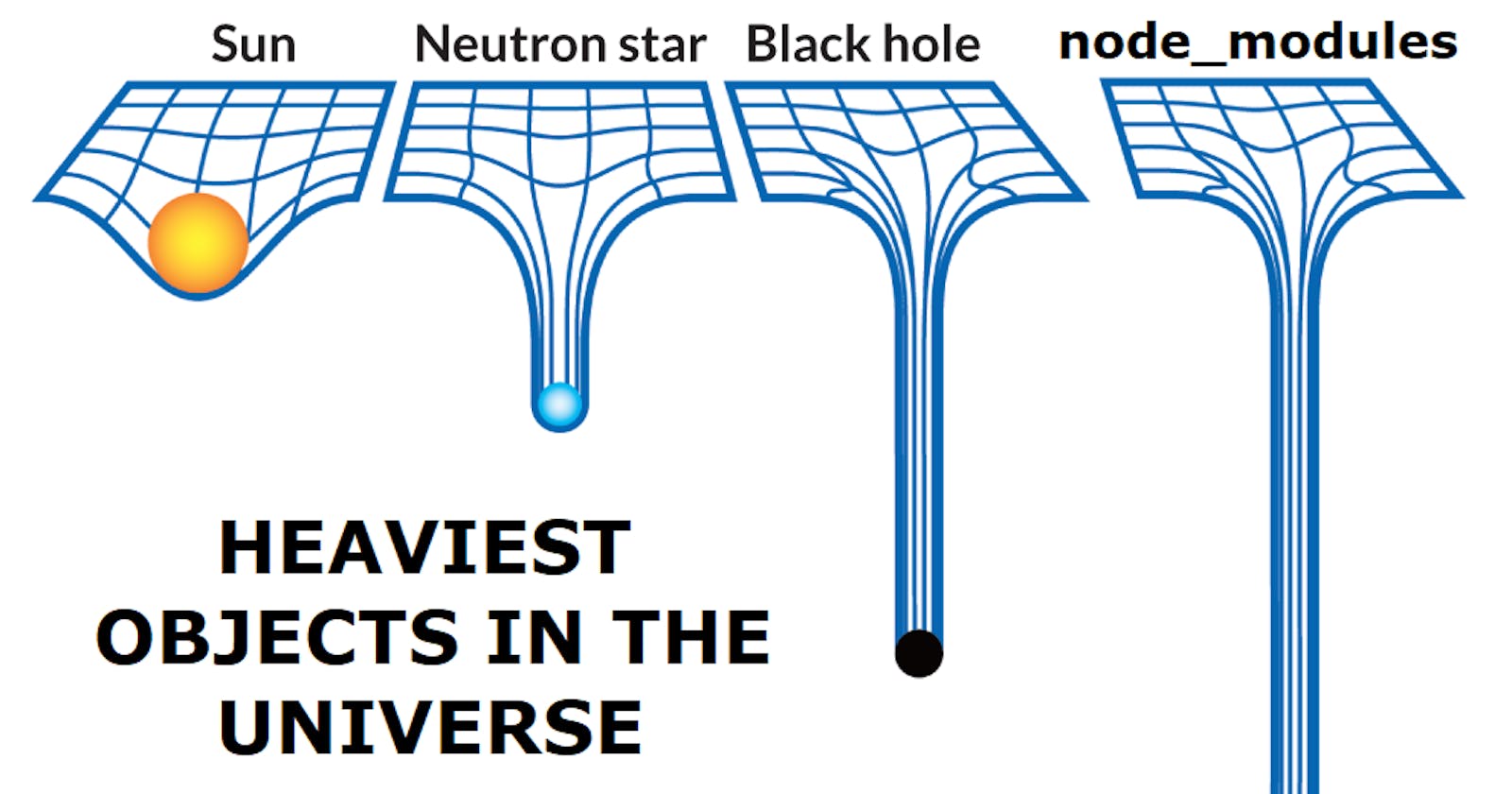

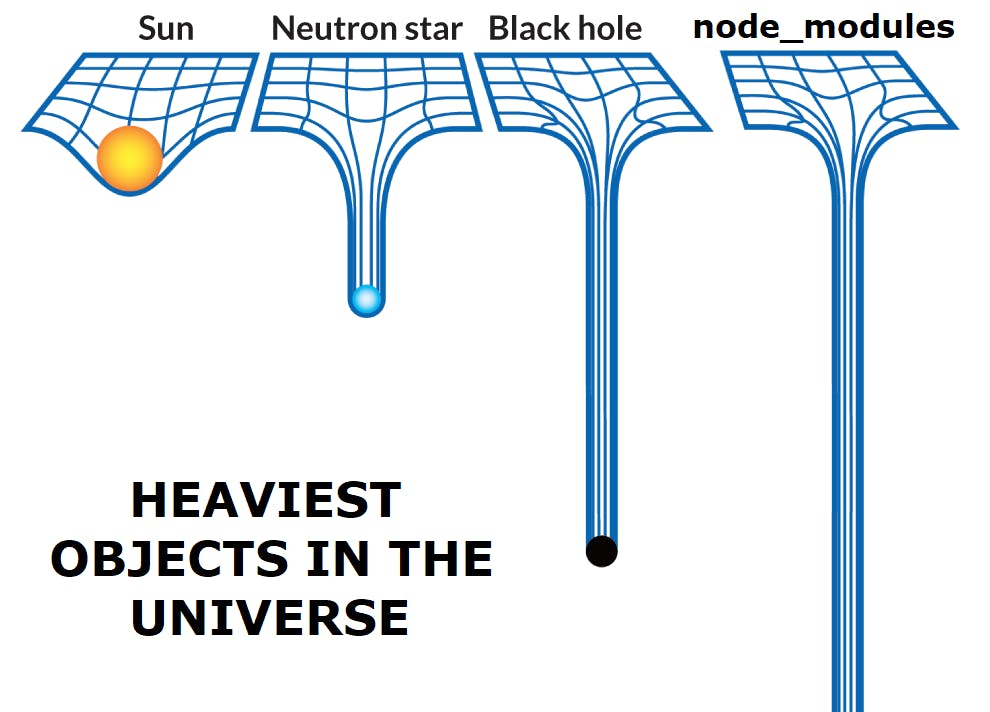

We've all had a nice chuckle at this meme before:

To anyone who's ever tried to make package installs fast, the size of node_modules is no joke. I'm sure most of us can find a couple of node_modules folders with sizes in the low single-digit gigabytes if not worse.

This means just the very act of caching and restoring the node_modules folder itself can take 10s of seconds as we add more and more dependencies, before we even begin to work on actual updates.

This eventually imposes a latency floor of 10s of seconds on every single deploy, even ones where only a tiny handful of app source modules are changed, and no NPM packages are even updated (by far the most common deployment scenario), just to make the occasional NPM package update faster. Not a great tradeoff if you ask me.

Everybody takes latency floors like this for granted today, but Reflame needed to do better if we wanted any chance of achieving our goal of 0-wait deployments, even just for the typical use case of updating only a tiny handful of app modules.

So, the rather naive solution I came up with in the Reflame MVP had the fairly obvious downside of doing a lot more work than necessary whenever it encountered a new set of packages, even if that new set was almost identical to a previously seen one, differing only in the version number of a single package.

But it was simple and quick to implement (important because I had already spent more than half a year burning through my savings to work on the rest of Reflame full time at that point), and could preserve the most important property of the deployment system I was trying to build: decoupling the speed of typical deploys (i.e. ones that don't update NPM packages) from the size of your NPM packages.

The idea was, in exchange for having to wait a bit longer when updating NPM packages, you don't have to wait at all when deploying anything that doesn't involve a change in NPM packages.

That was a tradeoff I was much more willing to accept in the short term, and judging from the absence of complaints from early users about having to wait for NPM package updates, thankfully it looks like I made the right call there.

But clearly, we still had a lot of room for improvement here, and anything that even has the potential to scale to 10s of seconds in latency deserves a place on our roadmap if I have anything to say about it.

The present

As of the time of this tweet:

I'm proud to announce that this optimization has been knocked off of our roadmap and into production.

Now, the latency in updating NPM packages only scales with the size of the packages (and downstream transitive dependencies) actually being updated, instead of with the size of the entire set of packages used by the app.

In practice, this means bumping versions of individual packages will generally take somewhere in the ballpark of 1-5s, depending on the sizes of the packages being updated. Check out the demo in the tweet to see it in action.

This is much faster than even the minimum latency floors on other deployment services under ideal circumstances.

We did this by making our caching logic much more granular. It now operates on the level of individual package versions, instead of on the level of entire package sets.

This means when you bump a package version, Reflame only needs to do work for the new version of the package you bumped to (and any new versions of its transitive dependencies), instead of for the entire set of packages specified in your config.
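
Here's a rough TypeScript sketch of what caching per package version looks like, including the transitive dependency part. The dependency graph is hard-coded and the package names (`app-ui`, `tiny-utils`) are invented, as are `deployPackages` and `processedVersions`, so this is an illustration under those assumptions rather than how Reflame is actually implemented:

```typescript
// Hypothetical data and names; a sketch of per-version caching, not Reflame's code.
type PackageId = string; // "name@version"

// Assumed, hard-coded dependency graph: package version -> its dependencies.
const dependencyGraph: Record<PackageId, PackageId[]> = {
  "app-ui@1.0.0": ["tiny-utils@2.0.0"],
  "app-ui@1.1.0": ["tiny-utils@2.1.0"],
  "tiny-utils@2.0.0": [],
  "tiny-utils@2.1.0": [],
  "react@18.2.0": [],
};

const processedVersions = new Set<PackageId>(); // the per-version cache

// Walk `roots` and their transitive dependencies, skipping anything cached.
// Returns the package versions that actually needed new work.
function deployPackages(roots: PackageId[]): PackageId[] {
  const newlyProcessed: PackageId[] = [];
  const pending = [...roots];
  while (pending.length > 0) {
    const id = pending.pop()!;
    if (processedVersions.has(id)) continue; // cached: skip it and its subtree
    processedVersions.add(id); // stand-in for the real per-package work
    newlyProcessed.push(id);
    pending.push(...(dependencyGraph[id] ?? []));
  }
  return newlyProcessed.sort();
}

const first = deployPackages(["react@18.2.0", "app-ui@1.0.0"]);
const bump = deployPackages(["react@18.2.0", "app-ui@1.1.0"]);

console.log(first.join(", ")); // app-ui@1.0.0, react@18.2.0, tiny-utils@2.0.0
console.log(bump.join(", ")); // app-ui@1.1.0, tiny-utils@2.1.0
```

After the bump, work is only done for the new `app-ui` version and the one transitive dependency that changed with it. `react` is untouched, no matter how large it is.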

A cool bonus of the new granular caching system is that if you revert to a previously used package version, that deploy will take the fast path using the cache metadata and complete in under a second flat, since work has already been done for that version, even if you have updated other packages since.

An even cooler bonus comes from the fact that the NPM package cache is shared across all Reflame users: when you use any package that other users have used before (e.g. React, which is used by every Reflame app by default), Reflame will take advantage of the shared cache to skip work and make your deploy even faster, even if you have personally never used the package before.
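
Both bonuses fall out of the same property: the cache key contains nothing but the package name and version. A small TypeScript sketch of that idea follows, with a hypothetical `SharedPackageCache` class and two imaginary apps. None of these names come from Reflame itself:

```typescript
// Hypothetical sketch: one cache shared by every app, keyed only by "name@version".
class SharedPackageCache {
  private readonly entries = new Map<string, string>();
  hits = 0;
  misses = 0;

  getOrProcess(pkgName: string, version: string): string {
    const key = `${pkgName}@${version}`; // no user or app ID in the key
    const cached = this.entries.get(key);
    if (cached !== undefined) {
      this.hits += 1;
      return cached;
    }
    this.misses += 1;
    const output = `processed(${key})`; // stand-in for the real work
    this.entries.set(key, output);
    return output;
  }
}

const shared = new SharedPackageCache();

// App A (imaginary) deploys lodash, bumps it, then reverts the bump.
shared.getOrProcess("lodash", "4.17.20"); // miss
shared.getOrProcess("lodash", "4.17.21"); // miss
shared.getOrProcess("lodash", "4.17.20"); // hit: the revert reuses earlier work

// App B (imaginary) belongs to a different user who has never used lodash.
shared.getOrProcess("lodash", "4.17.21"); // hit: app A already did the work

console.log(shared.hits, shared.misses); // 2 2
```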

Lastly, this was true before as well, but it's worth mentioning: if you use our VSCode extension, preview deploys through our GitHub app will usually take the super-fast path regardless of what you changed, because those changes will likely have been deployed already by the VSCode extension by the time you push them up. With this change, even most NPM package updates complete faster than a typical git push, let alone source module updates, which usually complete in under 400ms.

The future

So, NPM package updates in Reflame are now faster than in any other deployment system out there. That doesn't mean we're done improving them though!

For one, the shared package cache is designed to take advantage of the built-in network effects of Reflame as a SaaS by making package updates faster for everybody the more users use it. Even if we don't make any changes on our side, package updates will get faster for everybody over time as more packages are requested from it.

You can also imagine how we could help it out by listening to the firehose of updates on the NPM package registry and preprocessing every new package version the moment it gets published. This would lead to effectively 0 cache misses for new packages, meaning every package update would be ridiculously fast, all the time, for everybody (at least until we have to evict the least often used packages from our cache).
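
As a thought experiment, the pre-warming idea could look something like the TypeScript sketch below. Since none of this exists yet, everything here is an assumption: the shape of `PublishEvent`, the `PrewarmedCache` class, the tiny capacity, and the choice to break eviction ties by insertion order. It also doesn't talk to the real NPM registry. Publish events are just passed in by hand:

```typescript
// Hypothetical sketch: the publish feed, capacity and names are all assumptions.
interface PublishEvent {
  name: string;
  version: string;
}

class PrewarmedCache {
  // "name@version" -> how many times a deploy has requested it
  private readonly useCounts = new Map<string, number>();

  constructor(private readonly capacity: number) {}

  // Called for every publish event, before any user asks for the package.
  prewarm(event: PublishEvent): void {
    const key = `${event.name}@${event.version}`;
    if (this.useCounts.has(key)) return; // already processed
    if (this.useCounts.size >= this.capacity) this.evictLeastUsed();
    this.useCounts.set(key, 0); // stand-in for the real preprocessing work
  }

  // Returns true on a cache hit and records the use.
  request(pkgName: string, version: string): boolean {
    const key = `${pkgName}@${version}`;
    const count = this.useCounts.get(key);
    if (count === undefined) return false;
    this.useCounts.set(key, count + 1);
    return true;
  }

  // Drop the entry with the fewest uses (earliest inserted wins ties).
  private evictLeastUsed(): void {
    let victim: string | undefined;
    let lowest = Infinity;
    for (const [key, count] of Array.from(this.useCounts.entries())) {
      if (count < lowest) {
        lowest = count;
        victim = key;
      }
    }
    if (victim !== undefined) this.useCounts.delete(victim);
  }
}

const prewarmed = new PrewarmedCache(2);
prewarmed.prewarm({ name: "left-pad", version: "1.3.0" });
prewarmed.prewarm({ name: "react", version: "18.2.0" });
const hitReact = prewarmed.request("react", "18.2.0"); // true: pre-warmed
prewarmed.prewarm({ name: "lodash", version: "4.17.21" }); // evicts left-pad (0 uses)
const hitLeftPad = prewarmed.request("left-pad", "1.3.0"); // false: evicted
const hitLodash = prewarmed.request("lodash", "4.17.21"); // true: pre-warmed
console.log(hitReact, hitLeftPad, hitLodash); // true false true
```

The eviction step is where the "at least until" caveat shows up: once the cache is full, a pre-warmed package nobody has requested is the first to go.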

This requires throwing more money at the problem than I can justify at the moment, but is something I'm seriously planning to explore down the line. Exciting times ahead!